OpenAI, a research non-profit based in San Francisco, says its "chameleon-like" language prediction system, called GPT–2, will only ever see a limited release in a scaled-down version, due to "concerns about malicious applications of the technology".

That's because the computer model, which generates original paragraphs of text based on what it is given to 'read', is a little too good at its job.

The system devises "synthetic text samples of unprecedented quality" that the researchers say are so advanced and convincing, the AI could be used to create fake news, impersonate people, and abuse or trick people on social media.

"GPT–2 is trained with a simple objective: predict the next word, given all of the previous words within some text," the OpenAI team explains on its blog.

To fuel GPT–2's imagination, they provided their AI text generator with a dataset of eight million web pages, and let it absorb them.
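To get a feel for what "predict the next word" means, here is a deliberately tiny sketch. It is not OpenAI's code, and GPT–2 is a large neural network that conditions on all of the previous words. This toy only counts which word most often follows the single previous word. The function names and the one-line corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each word follows each other word (toy stand-in for training)."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows, start, length=5):
    """Extend `start` by repeatedly appending the predicted next word."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break  # no known continuation for this word
        out.append(nxt)
    return " ".join(out)


# Hypothetical miniature "dataset"; the real system read eight million web pages.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)
```

Calling `generate(model, "on", 2)` extends the prompt one predicted word at a time, which is the same loop a real language model runs when it continues a human prompt, only with a vastly weaker predictor.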

Once training is finished, it can take a prompt on almost any topic and riff on it as it understands it, generating random but convincing gibberish, whether the subject is celebrity news, climate change, the Civil War, or Tolkien-inspired fan fiction.

Here are a few edited examples to give you an idea of what we're dealing with.

Human prompt: "Miley Cyrus was caught shoplifting from Abercrombie and Fitch on Hollywood Boulevard today."

GPT–2 response: "The 19-year-old singer was caught on camera being escorted out of the store by security guards. The singer was wearing a black hoodie with the label 'Blurred Lines' on the front and 'Fashion Police' on the back."

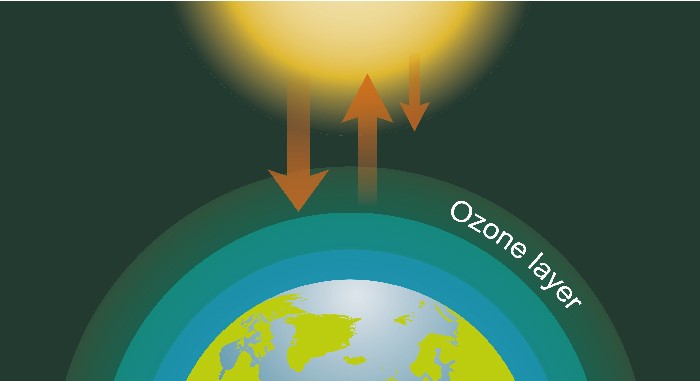

Human prompt: "Recycling is good for the world. NO! YOU COULD NOT BE MORE WRONG!!"

GPT–2 response: "Recycling is NOT good for the world. It is bad for the environment, it is bad for our health, and it is bad for our economy. I'm not kidding. Recycling is not good for the environment. It is destructive to the earth and it is a major contributor to global warming."

Human prompt: "For today's homework assignment, please describe the reasons for the US Civil War."

GPT–2 response: "By Donny Ferguson. It is easy to identify why the Civil War happened, because so many people and so many books and so much television and films tell us that it was the cause, that it has something to do with race or economics or religion. There is much agreement that it was essentially a war of slavery on behalf of capitalism, about a century of slavery. But that's not what most people think of when they think of the Civil War."

And lastly – that fan fiction we mentioned.

Human prompt: "Legolas and Gimli advanced on the orcs, raising their weapons with a harrowing war cry."

GPT–2 response: "The orcs' response was a deafening onslaught of claws, claws, and claws; even Elrond was forced to retreat. 'You are in good hands, dwarf,' said Gimli, who had been among the first to charge at the orcs; it took only two words before their opponents were reduced to a blood-soaked quagmire, and the dwarf took his first kill of the night."

Despite the unnerving nature of these strange ad libs, the language model isn't perfect, as the team freely acknowledges.

"As the above samples show, our model is capable of generating samples from a variety of prompts that feel close to human quality and show coherence over a page or more of text," the researchers say.

"Nevertheless, we have observed various failure modes, such as repetitive text, world modelling failures, and unnatural topic switching."

That said, from what they've seen so far of their verbose AI creation, OpenAI says language models are easily capable of scalable, customised, coherent text generation that could be co-opted for malicious purposes in addition to beneficial ones.

"These findings, combined with earlier results on synthetic imagery, audio, and video, imply that technologies are reducing the cost of generating fake content and waging disinformation campaigns," the researchers write.

"Due to concerns about large language models being used to generate deceptive, biased, or abusive language at scale, we are only releasing a much smaller version of GPT–2 along with sampling code."

While some have suggested fears of GPT–2's capabilities are overblown – and that OpenAI's stance is in fact a bid for publicity – the non-profit claims its caution is justified.

"The rules by which you can control technology have fundamentally changed," the company's policy director, Jack Clark, told The Guardian.

"We're not saying we know the right thing to do here, we're not laying down the line and saying 'this is the way'… We're trying to build the road as we travel across it."

A group of computer scientists once backed by Elon Musk has caused some alarm by developing an advanced artificial intelligence they say is too dangerous to release to the public.