For science-fiction buffs, "artificial intelligence" conjures Star Trek-like speculations on how smart machines could be, whether they can ever love, laugh and demand justice, or whether robots will eventually take over the planet. But far from the flicker of movie screens, in standard laboratories and ordinary research papers, a quiet AI revolution has already taken place, and has profoundly transformed the way scientists look at the world, and how they view their own thoughts and acts.

The daring AI premise that anything the mind does, machines can do as well, has engendered scientific advances that were unimaginable under more conservative paradigms, which were inhibited by ideas of "what computers cannot do."

One such advance involved reasoning under uncertainty. In the early days of AI, dealing with uncertainty was considered a fundamental philosophical hurdle. How could a digital machine, it was asked, programmed to obey the rules of binary, true-and-false logic, ever be able to cope with the heavy fog of uncertainty that clouds ordinary daily tasks such as crossing a street, parking a car, reading a text or diagnosing a disease?

Cognitive psychologists who were studying reading comprehension in children gave AI scientists the clue. They noted that the speed at which humans read text could only be explained as a collaborative computation among a vast number of autonomous neural modules, each doing an extremely simple and repetitive task. They also realized that a huge body of knowledge is embodied in the way those modules are connected to each other, specifically, which ones communicate with which ones. Finally, it became apparent that a friendly "hand-shaking" must take place between top-down and bottom-up modes of thinking. In the blink of an eye, for example, we humans can use the meaning of a sentence to help disambiguate a word and, at the same time, use a recognized word to help disambiguate a sentence.

This neural hand-shaking immediately called into focus Bayesian inference--a theory that dates back to a 1763 paper by the British mathematician and minister Thomas Bayes (1702-1761). It dictates how prior beliefs should combine with new observations, so as to obtain an updated belief that accounts for both. Surely, if I believe an animal to be a cat and observe her barking, my belief should be updated--Bayes' theorem tells us how.
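
The cat-that-barks update can be written out in a few lines. This is only a sketch: the function name `bayes_update` and all three probabilities are invented for illustration, not taken from any study.

```python
def bayes_update(prior, likelihood_if_true, likelihood_if_false):
    """Return P(H | E) from P(H), P(E | H) and P(E | not H)."""
    # Total probability of seeing the evidence at all.
    evidence = prior * likelihood_if_true + (1 - prior) * likelihood_if_false
    return prior * likelihood_if_true / evidence

# Hypothetical numbers: I am fairly sure the animal is a cat,
# cats almost never bark, and the alternatives (say, dogs) often do.
prior_cat = 0.9
p_bark_given_cat = 0.001
p_bark_given_not_cat = 0.5

posterior_cat = bayes_update(prior_cat, p_bark_given_cat, p_bark_given_not_cat)
print(f"P(cat | barking) = {posterior_cat:.4f}")
```

With these made-up numbers, a strong prior belief in "cat" drops to under two percent once the barking is heard.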

There was only one problem--size. The knowledge needed for even the simplest belief-updating task required an enormous memory space if encoded by standard Bayesian analysis. Similarly, the computation needed for properly absorbing each piece of evidence demanded an unreasonable amount of time.
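
A rough count shows how fast the memory requirement grows. The sketch below assumes every variable is binary. It compares a full joint probability table with a diagram in which each variable depends on at most a few others. Both function names are illustrative.

```python
def joint_table_size(n_variables):
    """Independent numbers needed to list every combination of n binary variables."""
    return 2 ** n_variables - 1

def network_size(n_variables, max_parents):
    """Upper bound when each binary variable depends on at most max_parents others:
    one number per variable for each combination of its parents' values."""
    return n_variables * 2 ** max_parents

for n in (10, 20, 30):
    print(n, joint_table_size(n), network_size(n, max_parents=3))
```

For 30 variables the full table needs over a billion entries, while the local encoding needs at most 240.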

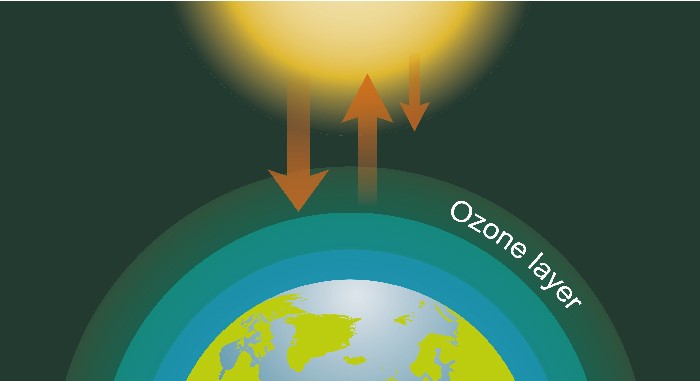

The solution that emerged from observing the way children read was cast in the form of Bayesian networks--diagrams of local dependencies, in which, for example, "smoke" depends on "fire" but not on "prayers." The diagrams divide the world into a society of loosely connected concepts. This division offers economical encoding of an agent's prior beliefs, and also defines the pathways along which beliefs are updated in response to new observations--for example, observing "smoke" makes "fire" more likely.
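
The fire, smoke and prayers example is small enough to compute by brute force. In the sketch below, all probabilities are invented for illustration, and the posterior is found by summing over every possible world rather than by any efficient algorithm.

```python
from itertools import product

# Hypothetical local tables: smoke depends on fire; prayers depend on nothing.
P_FIRE = 0.01
P_PRAYERS = 0.3
P_SMOKE_GIVEN_FIRE = {True: 0.9, False: 0.05}

def joint(fire, prayers, smoke):
    """Probability of one complete world, as a product of the local tables."""
    p_f = P_FIRE if fire else 1 - P_FIRE
    p_p = P_PRAYERS if prayers else 1 - P_PRAYERS
    p_s = P_SMOKE_GIVEN_FIRE[fire] if smoke else 1 - P_SMOKE_GIVEN_FIRE[fire]
    return p_f * p_p * p_s

def posterior(query, evidence):
    """P(query is True | evidence), summing the joint over all consistent worlds."""
    names = ("fire", "prayers", "smoke")
    num = den = 0.0
    for values in product([True, False], repeat=3):
        world = dict(zip(names, values))
        if any(world[k] != v for k, v in evidence.items()):
            continue
        p = joint(**world)
        den += p
        if world[query]:
            num += p
    return num / den

print(posterior("fire", {}))                  # belief in fire before any observation
print(posterior("fire", {"smoke": True}))     # belief in fire after seeing smoke
print(posterior("prayers", {"smoke": True}))  # prayers are unaffected by smoke
```

Seeing smoke raises the belief in fire from 1 percent to roughly 15 percent under these numbers. The belief in prayers stays at 0.3, because no pathway in the diagram connects the two.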

Theoreticians then noted that, under certain conditions, if we assign a processor to each variable in the diagram and allow that processor to communicate solely with its neighbors, a stable state will eventually be reached, in which each variable acquires its correct degree of belief.
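
The simplest case of such neighbor-to-neighbor communication is a chain of variables, where one sweep of messages in each direction is enough. The sketch below covers only that case and is not a general algorithm. The function name, the state encoding (0 for false, 1 for true) and the example numbers are all illustrative.

```python
def normalize(v):
    total = sum(v)
    return [x / total for x in v]

def chain_beliefs(prior, transition, likelihoods):
    """Posterior of every node in a chain X0 -> X1 -> ... -> Xn-1.

    prior[i]          = P(X0 = i)
    transition[i][j]  = P(X_{t+1} = j | X_t = i)
    likelihoods[t][i] = P(local evidence at node t | X_t = i); [1, 1] means none
    """
    n, k = len(likelihoods), len(prior)
    # Forward sweep: the message each node receives from its predecessor.
    pi = [list(prior)]
    for t in range(1, n):
        prev = [pi[t - 1][i] * likelihoods[t - 1][i] for i in range(k)]
        pi.append(normalize(
            [sum(prev[i] * transition[i][j] for i in range(k)) for j in range(k)]))
    # Backward sweep: the message each node receives from its successor.
    lam = [[1.0] * k for _ in range(n)]
    for t in range(n - 2, -1, -1):
        nxt = [lam[t + 1][j] * likelihoods[t + 1][j] for j in range(k)]
        lam[t] = normalize(
            [sum(transition[i][j] * nxt[j] for j in range(k)) for i in range(k)])
    # Each node combines its two incoming messages with its own evidence.
    return [normalize([pi[t][i] * likelihoods[t][i] * lam[t][i] for i in range(k)])
            for t in range(n)]

# Hypothetical three-node chain; only the last node is observed (state 1).
beliefs = chain_beliefs(
    prior=[0.99, 0.01],
    transition=[[0.95, 0.05], [0.10, 0.90]],
    likelihoods=[[1, 1], [1, 1], [0, 1]],
)
for t, b in enumerate(beliefs):
    print(t, [round(x, 4) for x in b])
```

No node ever looks at the whole chain. Each one sees only what its immediate neighbors send, yet the resulting beliefs match what a full enumeration of the joint distribution would give.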

Bayesian networks are today's standard method for handling uncertainty in computer systems, processing thousands of variables and millions of observations.

Their applications range from medical diagnosis, homeland security and genetic counseling to natural language understanding, search engines and mapping gene expression data. Perhaps the best-known example is Microsoft's Office Assistant, which determines users' problems and intentions--sometimes inaccurately--on the basis of users' actions and queries.

Development of these applications was driven by blind faith in the AI paradigm: "If humans can do a task swiftly and reliably, so should machines." Unexpectedly, however, the theoretical efforts spent on understanding the relations between diagrams and probabilities, two seemingly unrelated mathematical objects, sparked a revolution in another area of inquiry: causality. Special versions of Bayesian networks, as it turned out, can manage causal and counterfactual relationships as well.

There is hardly any scientific field that does not deal with cause and effect. Whether we are evaluating the impact of an educational program or running experiments on mice, we are dealing with a tangled web of cause-effect considerations.

And yet, though it is basic to human thought, causality is a notion shrouded in mystery and controversy. We all understand that the rooster's crow does not cause the sun to rise, but even this simple fact cannot easily be expressed in a mathematical formula (skeptics are invited to try).

Lacking mathematical tools, the analysis of cause and effect became a source of unending paradoxes and lingering embarrassments. For example, we may find that students who smoke obtain higher grades than those who do not smoke; but after we adjust for age, smokers obtain lower grades than nonsmokers in every age group; still, after adjusting for family income, smokers obtain higher grades than nonsmokers in every income-age group; and so on. Despite a century of analysis, this problem of "confounding"--that is, determining which factors should be adjusted for in any given study--continued for many years to be decided informally, with the decision resting on folklore and intuition rather than on scientific principles.
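
The first reversal in the smoking example is easy to reproduce with made-up numbers. In the sketch below the counts are entirely hypothetical, chosen so that smokers are mostly older and older students tend to get higher grades. "Adjusting for age" is done by averaging the within-group rates, weighted by the size of each age group.

```python
# (age group, smoker?) -> (number of students, number with high grades)
counts = {
    ("young", False): (80, 24),
    ("young", True):  (20, 4),
    ("old",   False): (20, 14),
    ("old",   True):  (80, 48),
}

def crude_rate(smoker):
    """Share of high grades among smokers (or nonsmokers), ignoring age."""
    n = sum(c[0] for (age, s), c in counts.items() if s == smoker)
    high = sum(c[1] for (age, s), c in counts.items() if s == smoker)
    return high / n

def stratum_rate(age, smoker):
    n, high = counts[(age, smoker)]
    return high / n

def adjusted_rate(smoker):
    """Age-adjusted rate: within-group rates weighted by each group's share."""
    total = sum(c[0] for c in counts.values())
    rate = 0.0
    for age in ("young", "old"):
        group_size = counts[(age, True)][0] + counts[(age, False)][0]
        rate += (group_size / total) * stratum_rate(age, smoker)
    return rate

print("crude:   ", crude_rate(True), crude_rate(False))
for age in ("young", "old"):
    print(age + ":", stratum_rate(age, True), stratum_rate(age, False))
print("adjusted:", adjusted_rate(True), adjusted_rate(False))
```

Ignoring age, smokers look better (52 percent against 38 percent). Within each age group, and after adjustment, they look worse. The arithmetic alone cannot say which comparison is the right one. That depends on what causes what, which is exactly the question the informal folklore left open.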

The mathematical and conceptual tools that emerged from the analysis of Bayesian networks have put such questions to rest and dramatically changed the way causality is treated in philosophy, statistics, epidemiology, economics and, of course, psychology and robotics. Today, we understand precisely the conditions under which causal relationships can be inferred from data. We understand the assumptions and measurements needed for predicting the effect of interventions (such as social policies) on outcomes (such as crime rates). And, not least, we understand how counterfactual sentences, like "I would have been better off had I not taken that drug," can be reasoned out algorithmically or inferred from data.

Counterfactual statements are the building blocks of scientific thought and moral behavior. The ability to reflect back on one's actions and envision alternative scenarios is the basis of free will, introspective learning, personal responsibility and social adaptation. Equipping machines with such abilities is therefore necessary for achieving cooperative behavior among robots and humans. The algorithmization of counterfactuals now brings us a giant step closer to understanding why evolution has endowed humans with the illusion of free will, and how it manages to keep that illusion so vivid in our brain.